Performance Practice

of Electroacoustic Music

Gerhard E. Winkler

KOMA

The development of ever more powerful computers in the nineteen-nineties made possible the extensive use of real-time processing and fostered the implementation of complex formalised concepts, the creation of network structures and the application of new forms of visualisation. Interaction became a central concern of the new digital real-time culture. As in the context of open forms, especially in the 1960s, questions of notation also came to the fore in the realm of live electronic systems. This was the background for the creation of Gerhard E. Winkler’s KOMA for string quartet, real-time scores, light-colour control and interactive live electronics.

Complex dynamic systems have played an important role in the composer’s works since the early nineties. In different ways, Winkler would install a mathematical model at the core of a relational network within a live electronic feedback system. The piece emerges through the interaction between the musicians and the system. According to Winkler, there should occur “a communication situation between man (musician) and machine (computer), where both ‘partners’ can and must react in a complex, nonlinear way (what makes communication ‘interesting’), can only be realized, when all these points are given and linked together: the musicians give signals to the computer, the computer reacts nonlinear [sic] to the signals and sends out other signals: the change of the parameters of the score and the change of the live electronic [sic] sound transformation.” [Winkler 2004, p. 1] On a symbolic level, such systems for Winkler function as nature-like world models: “I use complex dynamic systems […] not only to create a unique and challenging creative experience, but also because I have a deep interest in understanding the behaviour of these systems and their similarities with nature. We need to carefully examine the interdependencies between the smallest ecological network and global contexts to survive in a world more complex than we can imagine.” [Winkler 2010, p. 89]

In his piece Emergent for 13 players, computer-controlled synthesizer and live-electronic sound transformation (1993), Winkler developed such a system for the first time. After its interactive realisation, he transcribed one possible run-through of the system into a score. The resulting “work” thus remained fixed in writing and separated from the multiple possibilities of the system. Doubts about this very concept of permanently fixing a mere variant led Winkler to a modified approach from 1994 on. [Winkler 2019, 10:30 – 15:00]

In KOMA, realised between 1995 and 1996 at IRCAM, no single variant is fixed; rather, every interaction with the system creates its own unique instance. In a feedback loop, the four musicians play from the system-generated notation. What is played is fed into the system, which analyses the signals and controls the sound transformations, the changes in the system state and, in turn, the notation for the strings.

At the core of KOMA’s interactive system lies the mathematical “butterfly” model, which is part of French mathematician René Thom’s catastrophe theory. Put simply, the model describes the changes between extreme states of a system. By means of a continuous function, the algorithm makes it possible to obtain discontinuous processes: the system can suddenly tilt from one state to another. Within the butterfly model there are usually two opposing states between which one can jump back and forth; between these extremes, there is an inner zone. This zone can only be reached under certain conditions and it is easy to fall out of it. It is the musicians’ task to bring the transformed sound, floating around the hall, into this inner zone.
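How a continuous function can produce such sudden jumps can be illustrated with the textbook form of the butterfly potential, V(x) = x^6 + a*x^4 + b*x^3 + c*x^2 + d*x. The following Python sketch is not taken from the KOMA patches: the coefficient values, the slow sweep of d and the gradient-descent relaxation are assumptions chosen for illustration, and the way KOMA maps its own parameters “a”, “b” and “x” onto the model may well differ.

```python
def slope(x, a, b, c, d):
    """Derivative of the butterfly potential V(x) = x^6 + a x^4 + b x^3 + c x^2 + d x."""
    return 6 * x**5 + 4 * a * x**3 + 3 * b * x**2 + 2 * c * x + d


def relax(x, a, b, c, d, step=0.01, iterations=2000):
    """Let the state roll downhill until it settles in the nearest well."""
    for _ in range(iterations):
        x -= step * slope(x, a, b, c, d)
    return x


def sweep(d_values, x0, a, b, c):
    """Change the control value d slowly and record the settled state each time."""
    states = []
    x = x0
    for d in d_values:
        x = relax(x, a, b, c, d)
        states.append(x)
    return states


if __name__ == "__main__":
    # Illustrative values only (assumption): two outer wells plus a central one.
    a, b, c = -3.0, 0.0, 2.2
    d_values = [i * 0.05 for i in range(41)]   # d rises smoothly from 0.0 to 2.0
    states = sweep(d_values, x0=1.5, a=a, b=b, c=c)
    for d, x in zip(d_values, states):
        print(f"d = {d:4.2f}  ->  x = {x:+.3f}")
```

With these example values the control value changes in small, even steps, yet the settled state moves only slightly until its well disappears and then jumps to the opposite outer well in a single step, passing over the central well without stopping. This loosely mirrors the remark that the inner zone can only be reached under certain conditions.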

The title “KOMA” is on the one hand an acronym for “Catastrophe Theory Organised Musical Architecture” [Winkler 2019, 34:10], but on the other hand refers to a poetic concept: the “coma” is the luminous envelope around a comet’s core, stimulated by solar winds. Winkler compares the musicians in the middle of the hall with the comet’s core and the sound transformations moving through space with this luminous envelope [Winkler, KOMA (score), introduction]. Finally, the Greek word “coma” means deep sleep and metaphorically refers to the apparent freedom of those acting within the network [Winkler, KOMA (score)].

The four musicians are seated in the centre of the hall. The system assigns different roles to them. The role of the “leader” is assigned to one musician at a time and alternates between them. The audience sits around the quartet. Six speakers are placed around the audience; a seventh speaker hangs centrally above the players. If the system is located in the extreme areas of the butterfly, the sound will be heard in the periphery and the room will be illuminated with red or blue light. If the system is in the inner zone of the butterfly, the sound will be projected from the loudspeaker placed over the musicians and the light turns orange. Light and sound position support the leader in the task of bringing the system into the inner zone of the current butterfly state. This idea and the changing roles of the performers anticipate the use of game strategies that would come into focus some 20 years later.

The musicians see three types of instructions on screen: a “leader score”, a “living score” and score files. The first two are generated in real time. The score files are preproduced parts in standard notation. The initial data generated by the “catastrophe unit” define the starting leader, whose monitor will then display the corresponding score. The leader can choose to play a glissando between a starting note and one of two destination notes (higher or lower). The playing of the leader is then analysed and the data resulting from the analysis (pitch, dynamics and timbre) are interpreted as control data by the “catastrophe unit”. These data are used to generate the live scores and display the score files for the other musicians, and to control the live sound transformations, the spatial position of the sound and the light events. They are also used to introduce new target pitches and parameters in the leader score.

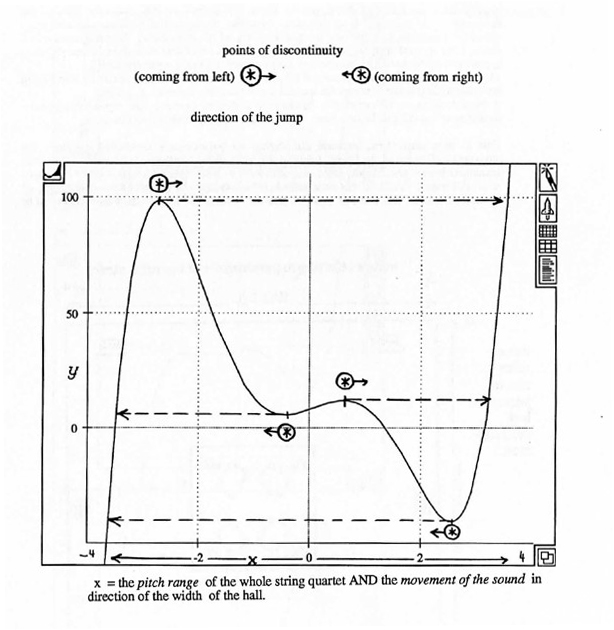

Each spatial position of the sound corresponds to a state of the butterfly model. The leader not only moves the sound around the space, but also changes the variables in the mathematical model. Three parameters, “a”, “b” and “x”, define its state and output: “a” and “b” influence the shape (curve) of the butterfly, while “x” (pitch range) defines the specific position on a given curve (see figure below). Parameter “a” is related to the timbre (“noisiness”) of the leader’s signal. Parameter “b” is generated from the difference between displayed and played dynamics. Variations of parameter “b” result in sound movements along the length axis of the space. Similarly, the pitch analysis of the leader’s signal directly controls the parameter “x” in the main butterfly and the sound position along the width axis of the space. When a so-called “macro catastrophe” occurs (that is, when the parameter “x” reaches a point of discontinuity), the sound projection jumps between the two sides of the hall and the current leader loses his position to another player.

Fig. I. KOMA, Documentation, p. 26
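The parameter-to-space mapping described in the preceding paragraph can be summarised in a few lines of Python. This is only a sketch under stated assumptions: the normalised range of -1.0 to 1.0, the names `Projection` and `project`, and the assignment of red and blue to particular sides are hypothetical; the source only states that “x” drives the width axis, “b” the length axis, and that the inner zone is linked to the top speaker and orange light.

```python
from dataclasses import dataclass


@dataclass
class Projection:
    width: float    # position across the hall, driven by parameter "x"
    length: float   # position along the hall, driven by parameter "b"
    speaker: str    # "periphery" (six surrounding speakers) or "top"
    light: str      # "red", "blue" or "orange"


def clamp(value, low=-1.0, high=1.0):
    return max(low, min(high, value))


def project(x, b, inner_zone):
    """Translate a model state into sound position and light colour."""
    if inner_zone:
        # Inner zone: sound from the speaker above the players, orange light.
        return Projection(0.0, 0.0, "top", "orange")
    width = clamp(x)
    # Which side is red and which is blue is an arbitrary choice here.
    light = "red" if width < 0 else "blue"
    return Projection(width, clamp(b), "periphery", light)
```

For example, `project(0.8, -0.2, False)` places the sound in the periphery on one side of the hall, while any state flagged as inner zone is routed to the top speaker.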

In KOMA the notation of the piece shifts from the score into the system. The system itself, and not one of its realisation variants, represents the work. This is made clear when Winkler describes authorship in this context as the generation of a set of potentialities. [Winkler 2004, p. 5]

The fact that, 23 years after the first performance, the electronic system is no longer executable on current hardware and software configurations presents today’s performers with various problems with regard to readability, performability and interpretation. Some specific issues will be addressed in the performance report (see below).

1) Performance Materials

a) Score and parts:

Due to the characteristics of the piece, there is no score of KOMA in the traditional sense. Instead, documentation is provided (see below).

As stated above, the musicians see three kinds of musical representations on the screen: “Leader Score”, “Livingscore” and “score files”.

The “Leader Score” contains the following elements:

- Start pitch, target pitch of glissando up or down (related to parameter “x”)

- Deviation of start and target pitch in cents upwards

- Duration of glissando in seconds (duration of a control cycle)

- General dynamics (related to the parameter “b”)

- Noisiness (related to the parameter “a”)

- Position of the sound in the room (width related to parameter “x”, length to parameter “b”)

The “Livingscore” contains the following elements:

- Pitch with deviation in cents, upwards

- Position of the bow on the instrument

- Playing technique and articulation

- Dynamics

- Microtonal deviations around the main tone

The “score files” are fixed and written in standard notation. They were generated during the creation process of KOMA with algorithms that are also based on the butterfly model. They are the only kind of notation that can be rehearsed individually in advance.

b) Patches

1. Composer’s patch

Source: Gerhard E. Winkler (received 12 February 2019)

Date: October 1995 – April 1996

System: Max 3 for control patch and screen, ISPW (NeXT) for DSP

Remarks: KOMA is composed of a collection of patches. The material was sent as a zip file containing patches, documentation and samples.

Files:

Folder name: Koma_Original

Zip file content:

1. Concert
   1. Screens for players
   2. Control programme (mac)
   3. DSP (ispw)
2. Production
   1. Mac Max patches
   2. Instrumental samples
   3. Processed samples
3. Documentation
   1. Docs
   2. Live performance
   3. PatchWork lib

2. IRCAM patch

Source: IRCAM (Serge Lemouton), sent April 2019

Date: 2016

System: Max 4, Max 8

Remarks: Updated files from Lemouton. The original files (from the premiere) had obsolete formats such as:

- ISPW files

- quickdraw images

- .snd audio files

- .sd2 audio files

- .max patches in binary format

- Max applications from Max 3

In 2016, these “historical” files were converted to more “standard” formats (namely .aiff, .maxpat, .pdf and .png). The software used for the conversions was ImageMagick (version 6.9.2), SoX and Max (version 7).

Files:

Folder name: Lemouton original

Zip file content:

1. Concert
   1. Screens for players
   2. Control programme (Mac)
   3. DSP (ispw)
2. Production
   1. Mac Max patches
   2. Instrumental samples
   3. Processed samples
3. Documentation
   1. Docs
   2. Live performance
   3. PatchWork lib

3. ICST patch

Source: ICST (Peter Färber & Leandro Gianini)

Title: Lemouton ZHdK

Date: 2019

System: Max 8 (screens: Mac Pro, OSX 10.13.6), Max 4.1 (DSP: Power Mac G4, OS 9.2), Max 3 (control: PPC 7100/80 AV, Mac OS 9.1)

Remarks: Based on the folder “Lemouton Original”. The patch contains some updates in order to run on the 2019 setup. The main updates are:

- the use of fiddle~ instead of pt~ (obsolete object) in the signal detection unit

- new implementation of the communication between control patch and screen patch via OSC (Power Book, OSX 10.13.6, Max 8)

- restoration of the objects split X, Pbank~, Readsf~, fsplit

- newly compiled bzero patcher. (Sent April 2019, Source: Serge Lemouton)

- new file formats: snd to wav, pict to png
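The OSC link between control patch and screen patch mentioned in the list above runs inside Max, but the wire format is simple enough to sketch in Python. The encoder below follows the OSC 1.0 message layout (null-terminated strings padded to four bytes, big-endian 32-bit arguments). The address `/koma/layer` and its arguments are purely hypothetical; the actual addresses used in the ICST patch are not documented here.

```python
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build a single OSC message with int, float or string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)      # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)      # big-endian float32
        else:
            tags += "s"
            payload += osc_string(str(arg))
    return osc_string(address) + osc_string(tags) + payload


# Hypothetical example: ask screen 1 to bring layer 3 to the foreground.
message = osc_message("/koma/layer", 1, 3)
```

Such a packet would typically be sent as a UDP datagram, for instance with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(message, (host, port))`, where host and port depend on the local network setup.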

2) Other materials

Documentation in English, German and French, self-published, 28 pages

Title: “KOMA for string-quartet and interactv[e] live-electronics – documentation”

Date: September 1995 – February 1996

Remarks: This document consists of a collection of different data and sources in different languages: the basic data; a list of “main ideas” explaining the concept and functioning of the piece, the elements of the system and the live-electronic transformation; an introductory text by Winkler in German; technical diagrams; screenshots and explanations of the leader score and livingscore; the complete score files for all instruments; and a “Short introduction in playing the ‘KOMA-Instrument’”.

Technical diagrams:

- “Performance Notes” with the stage disposition of musicians, screens, loudspeakers and audience

- “System overview”: a block diagram of the components of the system

- “Details of the catastrophe unit”: block diagram of the main and the 10 sub-butterfly units

- “Details of ISPW-Organization” in French: description of the audio signal processing

- “Fiche Technique” in French: representation of the lighting system

Scores:

- Description of the elements of the leader score and living score

- Description of bowing actions

- Score files in standard notation: vl. 1 (2 files), vl. 2 (3 files), va. (2 files) and vc. (2 files)

The “Short introduction in playing the ‘KOMA-Instrument’” is particularly important. It describes the parameters “a”, “b” and “x” ruling the behaviour of the system and suggests ways and strategies of interacting with them. In addition, it shows and describes different states of the butterfly algorithm.

3) Reference recording

Label: ORF Edition Zeitton

Performers: Arditti Quartet

Year: 1999

Format: Stereo

SR/bit depth: 44.1 kHz / 16 bit

Duration: 35 minutes

Remarks: live recording of the premiere

Editorial Instruction

Equipment

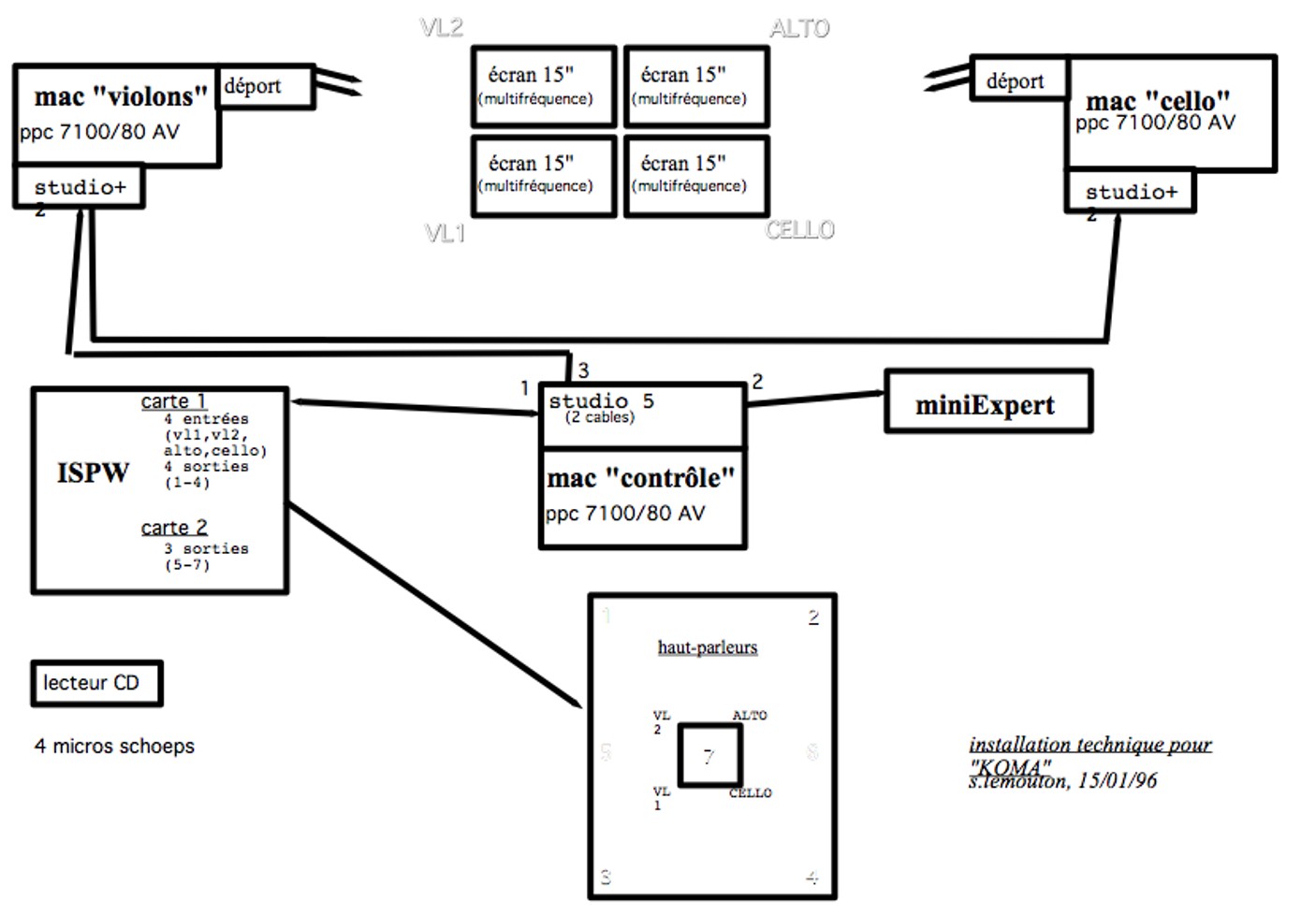

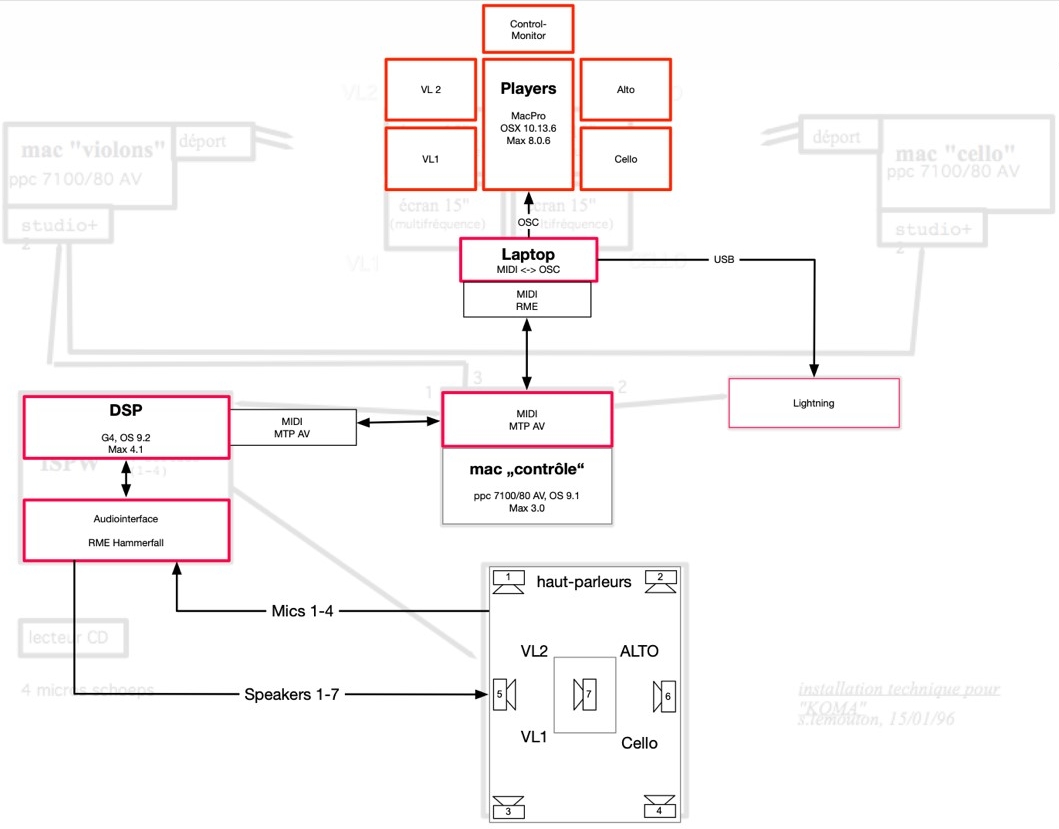

The original system involves four computers (see figure II):

– A control computer for generation and transformation of data (catastrophe units)

– Two computers for the generation of the live score, each of them connected to two monitors

– An analysis and live electronic processor, originally a NeXT computer with 3 ISPW DSP boards.

The computers are synchronised and controlled via MIDI. Four computer screens are connected to the computers for the live score generation. A MIDI controller is required for the analysis and live electronic processor, and a microphone for each instrument. However, the documentation doesn’t give any suggestion about the kind of microphone or its placement on the instrument. The microphone signals are routed to the analysis and live electronic processor, where they are either used to generate control data or transformed.

Fig. II. “Installation technique” by Serge Lemouton, IRCAM 1996

Disposition

The string quartet is seated in the centre of the room, the players facing each other, with the audience all around and the six loudspeakers in a circle behind the audience. A centre top loudspeaker is required, although it is described only in an additional stage plot and is missing from the documentation. Each musician has a computer screen on which the scores are displayed.

Description of the System

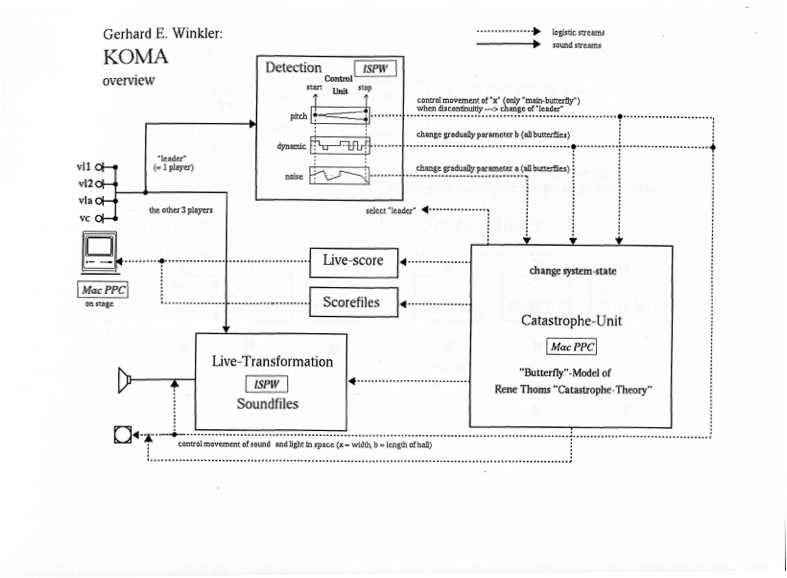

The system consists of five elements (see figure III):

- Detection and analysis

- Catastrophe unit

- Generation of scores

- Live transformation of the quartet’s playing

- Projection of pre-produced audio files

Fig. III. KOMA, system overview, original documentation

Detection

The detection is based on the analysis of the leader’s audio signal. The object pt~ is used to provide information about amplitude and pitch. The amplitude is only detected within a certain range: if the signal is too soft or too loud, the detection stops generating new data.

The amplitude is then used to gradually change the parameter b.

The pitch directly affects the movement of the parameter x on the main butterfly and is also used to generate the parameter début de note détecté (dnd, “note onset detected”). This parameter is responsible for triggering a new cycle of control data.

Originally, there was also a noisiness detector in the detection unit that would have gradually changed the parameter a. In the performance patch, this detection system was not used.
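The gating behaviour of the detection unit can be sketched as follows. This Python fragment does not reproduce pt~ or the original patch: the amplitude window, the pitch-jump threshold and the rule used here to raise the dnd flag (a pitch jump, or detection resuming after a gap) are assumptions made for illustration only.

```python
def detect(frames, amp_min=0.05, amp_max=0.9, note_gap=0.5):
    """Filter analysis frames and flag note onsets.

    frames: iterable of (amplitude, midi_pitch) pairs, one per analysis frame.
    Frames whose amplitude lies outside [amp_min, amp_max] produce no data.
    """
    events = []
    last_pitch = None
    for amplitude, pitch in frames:
        if not (amp_min <= amplitude <= amp_max):
            last_pitch = None          # detection pauses: nothing is generated
            continue
        # Assumed onset rule: first valid frame after a pause, or a pitch jump.
        onset = last_pitch is None or abs(pitch - last_pitch) >= note_gap
        events.append({"amplitude": amplitude, "pitch": pitch, "dnd": onset})
        last_pitch = pitch
    return events


# Hypothetical input: too soft, two valid frames, too loud, one valid frame.
example = detect([(0.01, 60), (0.2, 60), (0.3, 60.1), (0.95, 61), (0.4, 64)])
```

In this sketch each event flagged with `dnd` would start a new cycle of control data, while the amplitude of the valid frames would feed the gradual change of parameter b.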

Catastrophe unit

The catastrophe unit contains the main butterfly algorithm, which receives the parameters a and b via MIDI from the detection unit on the ISPW (NeXT) DSP processor and then generates data for the leader assignment and for other control processes. These control data are then sent back via MIDI to the NeXT processor to control the audio transformation, to the computers near the musicians to control the generation of the score files, and to the light system to control the intensity of the lights.

Generation of the scores

The actual score is a Max patch composed of five layers: 1. title, 2. leader score, 3. live score, 4. score file (page 1), 5. score file (page 2). According to the information received through MIDI from the control patch the corresponding layer is brought into the foreground.

Duration, pitch and dynamics for the leader score are generated in the control patch. All other parameters shown in the living score are also managed by the control patch.

Sound material

There are three sound categories in KOMA: purely acoustic sounds produced by the string instruments, pre-recorded sounds and live sound processes.

Pre-recorded samples (Sons sur disque dur)

In the samples folders we find the 26 unprocessed instrument samples that are the original pre-recorded material. (KOMA-original >2. Production >2. Instrumental samples >Sounds)

The samples are recordings of different instruments, such as violin, viola, cello and saxophone, and of a voice. 23 of them have been processed with different transformations and are used in the patch (sons sur disque dur). During the performance they are spatialized according to the data generated by the system.

Sound processing

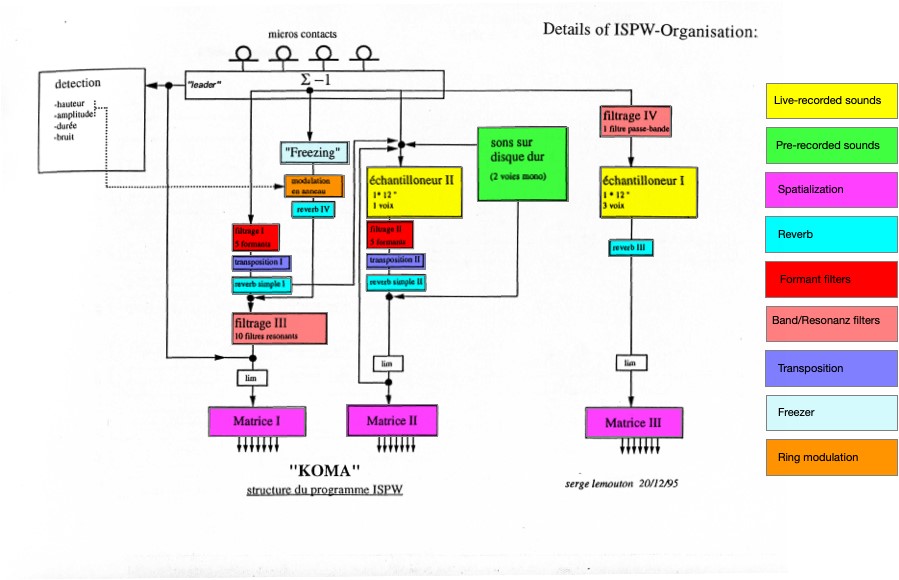

The sound processing within the ISPW involves the following processes (see figure IV):

- Formant filter

- Resonance filter

- Band filter

- Freezing

- Ring modulation

- Transposition

- Spatialization

- Live sampling

Fig. IV. Details of ISPW organisation by Serge Lemouton, IRCAM 1995 (colour editing and legend by Leandro Gianini)

Performance report

The concert took place on 27 June 2019 at ZHdK’s main concert hall (GKS 3) with a string quartet composed of Assad Fishyan, Lorgia Loor, Anton Vilkhov and Moritz Müllenbach; Germán Toro Pérez, sound projection; Peter Färber, system engineer; Leandro Gianini, sound engineer; and Viktoras Zemeckas, light technician.

KOMA had not been performed since the premiere. Thus, the software and the documentation correspond to the technical state of that time. The original patch used for the premiere was provided by the composer. A second patch containing some updates (file formats) was received from IRCAM’s Serge Lemouton on request (see Sources). Neither of the patches ran on current computers, although some files had been updated to current formats.

Restoration

In the months preceding the performance we started to build the system based on these materials. A first goal was to run the software on current computers. For that, old objects had to be newly programmed and the network structure adapted. Given the missing information about the functionalities and the high complexity of the patch, it soon became clear that it was not possible to achieve this within a reasonable timeframe. Although the most important external, called “b-zero” and containing the butterfly algorithm, was kindly rewritten by Serge Lemouton based on old handwritten documents, the goal of running the system on current computers had to be abandoned. Instead, it was decided to set up a system resembling the original one as closely as possible in order to be able to analyse the patches and understand the function of all units. This setup consisted of the following elements (see Fig. V and VI):

- A Power Macintosh (1995, ppc 7100/80 AV OS 9.1, MAX 3), running the original Max patch as the main control computer.

- A Power Mac G4 (1999, OS 9.2, Max 4.1) for sound analysis and processing running the updated patch by Serge Lemouton on Max 4, instead of a NeXT computer with ISPW DSP boards.

- A laptop at the FOH connected via OSC to a Mac Pro (2014, OSX 10.13.6, MAX 8.0.6) on stage with 4 monitor outputs as the score-generating computer.

Fig. V. Network structure by Peter Färber, ICST 2019 superimposed onto the original “technical installation”, IRCAM 1996

Fig. VI. Setup at ICST 2019 combining historical and contemporary hardware.

Again, the analysis process proved more demanding than expected. A full re-programming of the system for current (2019) computers would have been far too time-consuming. Therefore, the provisional analysis system described above was improved and used for the performance.

Amplification

In a first rehearsal stage, DPA 4099 microphones were used on the string instruments. Later on, Schertler pickups were used instead to minimise the leakage from other instruments and from the electronics. In fact, the dynamics of the instruments and the electronics can differ greatly and change suddenly. Some instruments can be very loud and others very soft at the same time.

Moreover, gain staging is crucial for the signal analysis controlling the processing. The gain of the leader had to be constantly adjusted slightly in order to be equal across the whole pitch range. With the Schertlers applied to the body of the instruments, some frequencies would come out louder than others. This problem was reduced by a different pickup placement, some equalisation and live gain adjustment. A meter was added in the upper layer of the patch in order to be able to constantly check the input level used for the analysis.

The six speakers used were K&F Gravis 15 W; the centre top speaker was an L-Acoustics 108 P.

Monitoring

The circular disposition with the ensemble playing in the centre of the venue didn’t require an audio monitor system.

A remote desktop viewer was added for the computer generating the scores. With this it was possible to see from the FOH the scores of the musicians and react when the score system wasn’t working properly. This was also very useful during rehearsals to help the communication.

Lights

Despite the technical description there is no information on what the original lighting looked like. Although the composer and the original sound engineer were present during our rehearsals, they could not provide any additional information. We had to adapt the light setup to the new space trying to be consistent with the overall concept.

The MIDI values controlling the lights had to be slightly adjusted, since the LED technology used in the hall presumably behaved differently from the spots used for the premiere at IRCAM. Indeed, the dimmer function in LED devices can react very differently. The main issue was that the difference between attenuated light and full light was too small. In order to increase this difference, we scaled the MIDI data logarithmically instead of using them directly.
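As an illustration of what a logarithmic rescaling of MIDI values can look like, the following Python sketch maps the range 0 to 127 onto itself along a logarithmic curve. The exact curve used for the Zurich performance is not documented here, so the formula, its direction and the steepness constant `k` are assumptions rather than the actual implementation.

```python
import math


def log_scale(value, k=9.0):
    """Map a MIDI value (0..127) onto 0..127 along a logarithmic curve.

    k controls how strongly the curve bends; k = 9.0 is an arbitrary choice.
    """
    value = max(0, min(127, value))
    return round(127 * math.log1p(k * value / 127) / math.log1p(k))


# The end points are preserved while the values in between are redistributed.
table = [log_scale(v) for v in range(128)]
```

The end points stay at 0 and 127, so only the distribution of the intermediate dimmer values changes. In practice the curve would have to be tuned by eye against the actual LED fixtures.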

Rehearsals and performance

In order to test and understand the behaviour of the system without the musicians, we programmed a simulator of the leader using sine tones. Obviously, the acoustic result of the simulation was incomplete and could give only a partial idea of the piece, since the live transformation affects all the players. Nevertheless, the simulation proved to be a useful tool for test and development purposes.
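A leader simulator of this kind can be approximated with a sine glissando between a start and a target pitch, as in the leader score. The Python sketch below is not the simulator used at ICST; the function names, the linear pitch interpolation and the default values are assumptions.

```python
import math


def midi_to_hz(note):
    """Convert a (possibly fractional) MIDI note number to frequency, A4 = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def sine_glissando(start_note, target_note, seconds, amplitude=0.5, sample_rate=44100):
    """Return samples of a sine tone gliding from start_note to target_note."""
    count = int(seconds * sample_rate)
    phase = 0.0
    samples = []
    for i in range(count):
        # Linear interpolation in pitch (semitones), not in frequency.
        note = start_note + (target_note - start_note) * i / max(1, count - 1)
        # Accumulate phase so the frequency change causes no clicks.
        phase += 2 * math.pi * midi_to_hz(note) / sample_rate
        samples.append(amplitude * math.sin(phase))
    return samples
```

Feeding such a signal into the detection unit instead of a microphone makes it possible to exercise the pitch and amplitude analysis with fully controlled input.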

Due to the technical difficulties and uncertainties as well as the singularity and unpredictability of the system the musicians had to overcome several difficulties during the rehearsal process. Some of the general and specific challenges were:

- The lack of a way to rehearse individually without having the whole system running and all musicians in place

- Reacting immediately to a non-conventional, very detailed and sometimes very quickly changing notation on the screen

- The realisation of extreme dynamic information (between pppppp and ffffff) in the given musical situation

- The synchronisation and scaling of simultaneously moving curves for dynamics and microtonal detuning

The main challenge for the performers, however, was to adapt the score information to the overall musical situation and to interact not only with the system but with the other musicians and the sounding environment as well.

Moreover, becoming aware of and reacting to the sound position and the light proved to be very difficult. At the time of the premiere, the composer asked the musicians to navigate the system with the aim of moving the sounds to the centre of the stage. This task proved to be too complex, and for the Zurich performance the composer suggested that the musicians just navigate freely through the system.

Besides taking care of the gain control and the balance of the sound projection, the live electronic performers had two additional tasks. First, they had to react to the tendency of the system to prioritise the first violin as leader by redistributing this role among all musicians; a corresponding feature was added to the MIDI controller of the G4 processor. Second, they had to manually adapt the parameters “a” and “b” in view of a varied and dynamic performance without interfering too much with a system intended to run autonomously. This corresponds to the approach taken in the premiere performance. According to the composer, controlling parameter “a” was necessary in any case, since the analysis of the timbre (“noisiness”) had initially been implemented at the time, but was finally abandoned for technical reasons.

In the patcher Controles-LIVE, the live electronic performer has at their disposal some parameters that are already assigned to a MIDI fader controller.

Fader 1: Spat 1 gain (Spat1-gain)

Fader 2: Spat 2 gain (Spat2-gain)

Fader 3: Spat 3 gain (Spat3-gain)

Fader 4: Parameter a (simul-a)

Fader 5: Parameter b (simul-b)

Fader 6: Ring modulation volume (ringVol)

Fader 7: Sound files volume (sfVol)

The faders mainly control the volume of the sounds projected through the speakers, so that the overall balance can be adjusted. The controls for parameters a and b, on the other hand, are more complex. Moving the fader sends a value to the patcher in which the parameter a or b is calculated. This value is added to the calculation process and allows the electronic performer to control the direction (positive or negative) of the parameters generated next. It is important to realise that the controls for a and b are not direct, but indirect: a completely new generation of control data is necessary for the change to take effect.

These challenges and interventions can be seen as alien or even contradictory to the original concept. Nevertheless, they raise questions about the limits of autonomous musical systems, going beyond mere technical issues.
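The indirect nature of the a and b controls can be made concrete with a small Python sketch. It is a simplified model under stated assumptions: the class name, the fader-to-offset scaling and the placeholder generator are hypothetical, and the real calculation inside the Max patcher is not reproduced.

```python
class IndirectControl:
    """A parameter whose fader offset only applies at the next generation cycle."""

    def __init__(self, generate):
        self.generate = generate     # hypothetical source of the raw parameter value
        self.pending_offset = 0.0    # set by the fader, not yet audible
        self.value = 0.0             # value currently used by the system

    def move_fader(self, midi_value):
        # Map 0..127 to roughly -1.0..+1.0 (assumed scaling); self.value is untouched.
        self.pending_offset = (midi_value - 64) / 64.0

    def new_cycle(self):
        # Only a new generation of control data picks up the fader offset.
        self.value = self.generate() + self.pending_offset
        return self.value


# Hypothetical usage with a constant raw value standing in for the patch's generator.
control = IndirectControl(lambda: 0.25)
control.new_cycle()
control.move_fader(96)   # no immediate effect on control.value
```

Until `new_cycle()` is called again, `control.value` keeps its previous value, which corresponds to the delay the performers experience between moving the fader and hearing a change.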

KOMA doesn’t have a composed end. The live electronic performers just stop the system and the musicians will finish playing individually. The composer suggests 20-25 minutes as approximate duration. After that the system would start exhibiting an increasingly redundant behaviour.

According to Winkler, the piece could also be realised as a performative installation. In that case the audience could come and go freely and KOMA could last much longer.

Winkler, Gerhard E. (no year): Werk- Dis- Kontinuum. Zum musikalischen Werkbegriff des Real-Time-Score. Online: http://gerhardewinkler.com/wp-content/uploads/Hybrid2-Meta.pdf (last accessed: 1 December 2019).

Winkler, Gerhard E. (no year): KOMA (score), introduction, [no pagination, no year].

Winkler, Gerhard E. (2019): Talk on KOMA. Held at ZHdK, 26 June 2019.

Winkler, Gerhard E. (2013): Spielend navigieren. Musizieren in den Möglichkeitsräumen des Real-Time-Score. In: Gratzer, Wolfgang & Neumaier, Otto (eds.): Arbeit am musikalischen Werk. Zur Dynamik künstlerischen Handelns. Freiburg, Berlin, Wien: Rombach, pp. 267–278.

Winkler, Gerhard E. (2010): The Real-Time-Score: Nucleus and Fluid Opus. In: Contemporary Music Review, 29 (1), pp. 89–100.

Winkler, Gerhard E. (2009): …nur erst einige Bewegungen, als Buchstaben der Natur… Computersimulation und musikalische Komposition. In: Hiekel, Jörn Peter (ed.): Spannungsfelder. Neue Musik im Kontext von Wissenschaft und Technik. Mainz: Schott, pp. 44–55 (Veröffentlichungen des Instituts für Neue Musik und Musikerziehung Darmstadt, 49).

Winkler, Gerhard E. (2004): The Realtime-Score. A Missing Link in Computer-Music Performance. In: First Sound and Music Computing Conference, SMC´04, IRCAM, Paris, 2004, Conference Proceedings, pp. 9–14.

Winkler, Gerhard E. (1993): “Den Zufall dynamisieren…” Beobachtungen am Rande der Autopoiëse. In: Zwischen-Ton, Summer 1993, pp. 4–8.

Ender, Daniel & Winkler, Gerhard E. (2009): “Ich arbeite gerne mit Widersprüchen.” Gerhard E. Winkler im Gespräch mit Daniel Ender. In: Österreichische Musikzeitschrift, 64 (10), pp. 48–50.